Ring Buffers For Realtime Video Recording

Ring Buffers for Realtime Video Recording

When working with real-time computer vision applications, one of the most challenging problems is managing continuous data streams efficiently. Sounds boring, right? Well, it’s the difference between a working system and junk.

Imagine you’re building a system to detect when an elderly person falls from their bed. You may have a perfectly accurate fall detector, but here’s the catch: by the time your algorithm recognizes the fall and triggers the recording, the crucial frames that characterize the fall have already passed by.

This creates a fundamental problem: we need to capture not just the moment of detection, but also the sequence of events leading up to it. Why? Because caregivers need visual context to understand what happened, and researchers need complete event sequences to improve their models. The question becomes: how do you store the “before” when you only know it matters “after”?

This isn’t just an elder care problem. Self-driving cars need to record the moments leading up to sudden braking events or near-misses. Robots working alongside humans need to capture the sequence when something goes wrong during manipulation tasks. Augmented reality systems need context when hand tracking fails or objects disappear. Security systems need the buildup to detected intrusions. Manufacturing lines need the lead-up to quality control failures. The pattern is everywhere in computer vision: the interesting stuff happens before you know it’s interesting.

Why Ring Buffers for Video Recording?

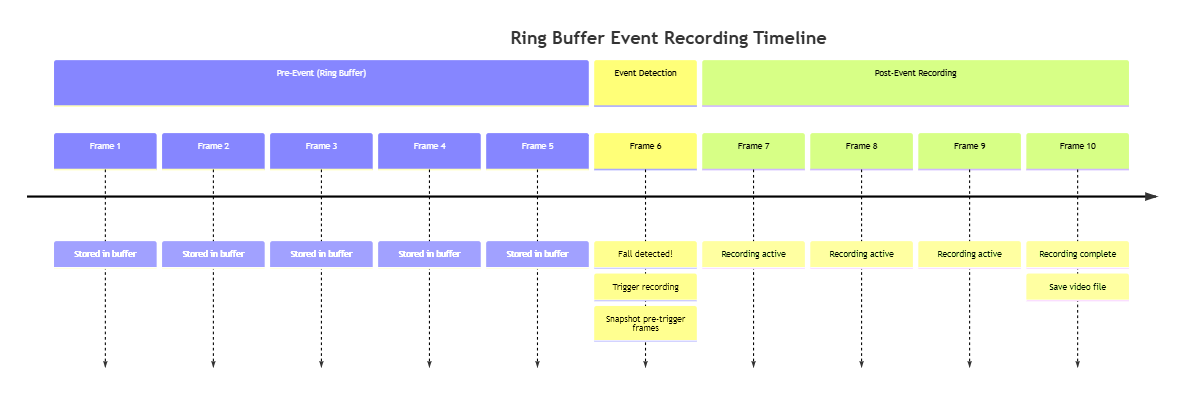

The key insight is that video events don’t announce themselves beforehand. Ring buffers solve the fundamental problem of capturing pre-event context when you only know it matters post-event. In our elder care scenario, we need both the fall sequence leading up to the detection and the aftermath, a dual-phase recording that captures pre-trigger + post-trigger frames.

This is where video applications differ from typical ring buffer uses. You’re not just maintaining a rolling window of recent data but you’re maintaining a recording system that can retroactively decide what was important. The critical advantage for video applications is predictable memory usage regardless of how long the system runs, crucial when dealing with frames arriving at 25Hz or higher.

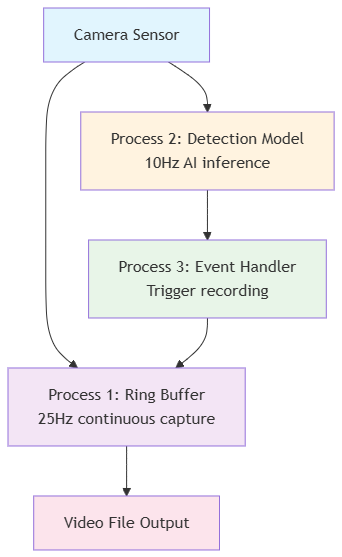

Decoupling Recording from Detection

Your ring buffer recording should run as a separate thread or service from your detection pipeline. Detection and recording have fundamentally different performance requirements.

Recording Requirements:

- Must capture every single frame (lossless)

- Needs to run at full sensor frequency (25Hz in our example)

- Simple, fast operations only

Detection Requirements:

- Can afford to skip frames

- Computationally intensive deep learning models

- May run at reduced frequency (10Hz) due to processing constraints

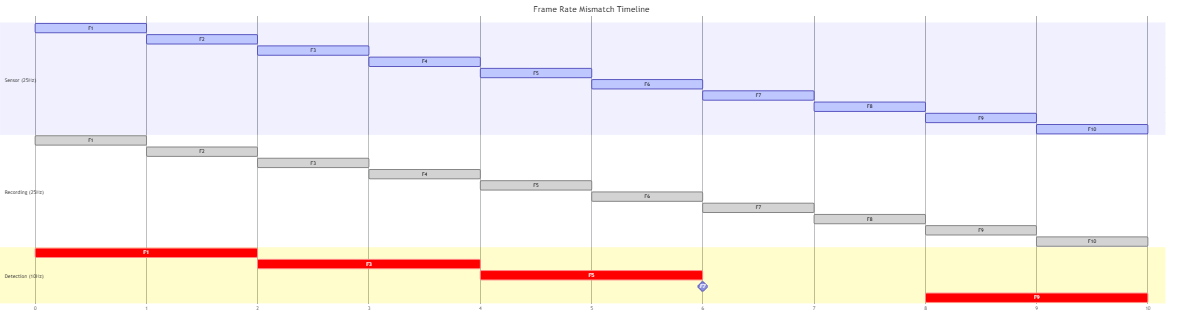

Handling Frame Rate Mismatches

In edge AI scenarios, this separation becomes even more important. Your fall detection model might be so compute-intensive that it can only process every 2nd or 3rd frame:

The Problem: If your detection algorithm running at 10Hz detects a fall at frame F7, the crucial frames F1-F6 might have already been discarded by a naive recording system.

The Solution: Your ring buffer, running independently at 25Hz, has preserved all frames. When the detection triggers, you have the complete sequence.

Edge AI Implementation Considerations

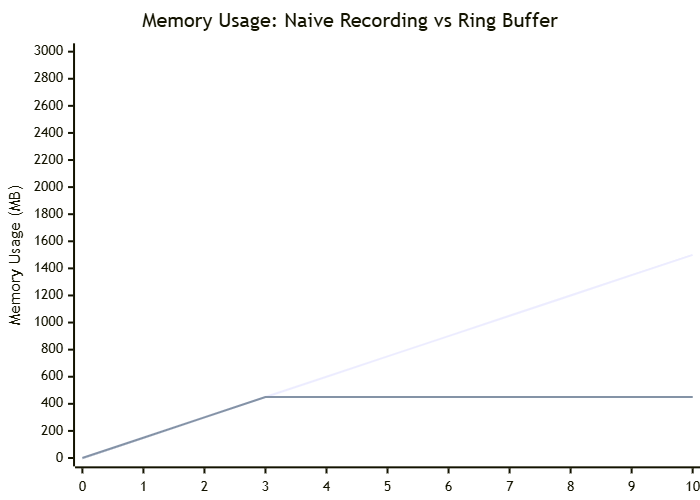

Memory Constraints: Edge devices have limited RAM. Calculate your buffer size carefully:

- Frame size: 1920×1080×3 bytes = ~6MB per frame

- 75 frames (3 seconds at 25Hz) = ~450MB

The key advantage of ring buffers becomes clear when you compare memory usage over time:

Processing Separation:

Power Management: The ring buffer thread should be lightweight, just memory copies. Save the heavy lifting (AI inference) for the detection thread that benefit from NPU/GPU processors.

Why This Architecture Works

When your 10Hz detection algorithm finally recognizes a fall, it doesn’t matter that it “missed” frames during processing. The ring buffer thread, running faithfully at 25Hz, captured everything. You get:

- Complete event context (despite detection lag)

- No frame loss (recording runs independently)

- Efficient resource usage (detection can run slower)

- Scalable performance (adjust detection frequency based on hardware)

This separation is what makes ring buffers so powerful for real-time applications, they bridge the gap between continuous data streams and intermittent processing capabilities.

Implementation Challenges & Solutions

Building this architecture in practice involves several critical challenges. Here there are some snippets of my solution that may help you understand how to implement this:

Inter-Process Communication

# Our camera works as a zeromq pub. We connect to it.

context = zmq.Context()

frame_socket = context.socket(zmq.SUB)

frame_socket.connect(f"tcp://localhost:{zmq_port}")

# Our detector is passed a messaging queue. Old n dirty message enqueing would do the trick for us to create the video.

trigger_queue = multiprocessing.Queue()

Handling Trigger Overlapping

def handle_trigger(self, trigger):

if trigger == "get_video":

# Ignore overlapping triggers. Once we detected a fall, there is a chance the elder is still on the ground.

if self._is_recording:

self.logger.info("Already recording. New trigger ignored.")

return

# Start new recording session

self._is_recording = True

self._capture_pre_trigger_snapshot()

Automatic Recording Termination

def check_recording_complete(self):

# Condition 1: Enough time after trigger. We want to record some time after the detection occurs.

enough_post_frames = (

self.post_trigger_count >= (self.seconds_after_trigger * self.fps)

)

# Condition 2: Minimum total duration met

total_frames = len(self.pre_trigger_snapshot) + len(self.post_trigger_frames)

enough_total_duration = (

total_frames >= (self.min_length_seconds * self.fps)

)

return enough_post_frames and enough_total_duration

Queue Management Best Practices

# In trigger detection process

try:

trigger_queue.put("get_video", block=False)

except queue.Full:

logger.warning("Trigger queue full - dropping trigger")

# In recording process

try:

trigger = trigger_queue.get(timeout=0.1)

self.handle_trigger(trigger)

except queue.Empty:

continue # Normal operation

Some takeaways

- Separate concerns: Frame capture, detection, and recording should be independent processes

- Choose communication wisely: ZeroMQ for data, multiprocessing.Queue for control

- Handle edge cases: Overlapping triggers, memory limits, process failures

This architecture helps the critical recording function to remain responsive even when AI detection models struggle under computational load, exactly what you need for reliable edge AI deployment.

Final thoughts

So here’s the thing nobody talks about enough: building production computer vision systems is way harder than just getting your model to work. All those Medium articles? They’re garbage. They show you how to get 99% accuracy on CIFAR-10 and call it a day.

Look, I’ve learned this the hard way: you can have the most accurate fall detection model in the world, but if you can’t handle the video stream properly, it’s just useless code. The same goes for autonomous vehicles that can’t record near-miss incidents, robots that lose manipulation context, or AR systems that can’t debug tracking failures. The gap between “my model works in Jupyter” and “my model works in the real world” is huge, whether that’s an elderly care device, a Tesla, or a factory robot.

Edge AI makes this even worse. You can’t just rent bigger servers when your elderly care device needs to run on a Jetson Nano with 4GB of RAM, or when your autonomous vehicle needs real-time processing in a moving car, or when your AR headset has strict power and thermal constraints. Suddenly you’re dealing with:

- Memory constraints that force you to think about every single frame

- Concurrent programming (which, let’s be honest, most of us avoided in school)

- Real-time requirements where “close enough” isn’t good enough

- Hardware limitations that make your beautiful architecture crumble

And guess what saves your ass? Ring buffers. Not transformer models, not the latest PyTorch release. A basic computer science concept from the 1970s.

Stop obsessing over model architectures and learn the systems side. You will have time to make those better but at the end of the day, reliability trumps accuracy when you’re dealing with real-world applications. Your 99.9% accurate model is worthless if it crashes every 10 minutes.

The ring buffer is just one piece of the puzzle, but it’s a perfect example of how old-school computer science concepts still matter. Sometimes the most important part of your system is the part that just quietly does its job in the background.

Don’t get me wrong, the fancy AI models are important too. Better architectures, smarter training techniques, more efficient inference, that stuff matters. But it only matters if you can actually deploy it reliably. We’ll explore both sides in future posts: the boring infrastructure that makes things work and the cutting-edge AI that makes them smart.

Whether you’re building elder care systems, autonomous vehicles, collaborative robots, or AR applications, the engineering challenges are remarkably similar. Ring buffers for event capture, process separation for reliability, memory management for edge deployment. The computer vision domain changes, but the fundamental systems engineering remains the same. Master these patterns once, and you can apply them everywhere.

Comments